The risks of gain-of-function research on pandemic potential pathogens such as the SARS and MERS coronaviruses outweigh the benefits. Oversight of biosafety, biosecurity, and biorisk management in laboratories must be strengthened and placed under an independent national agency that does not perform or fund research.

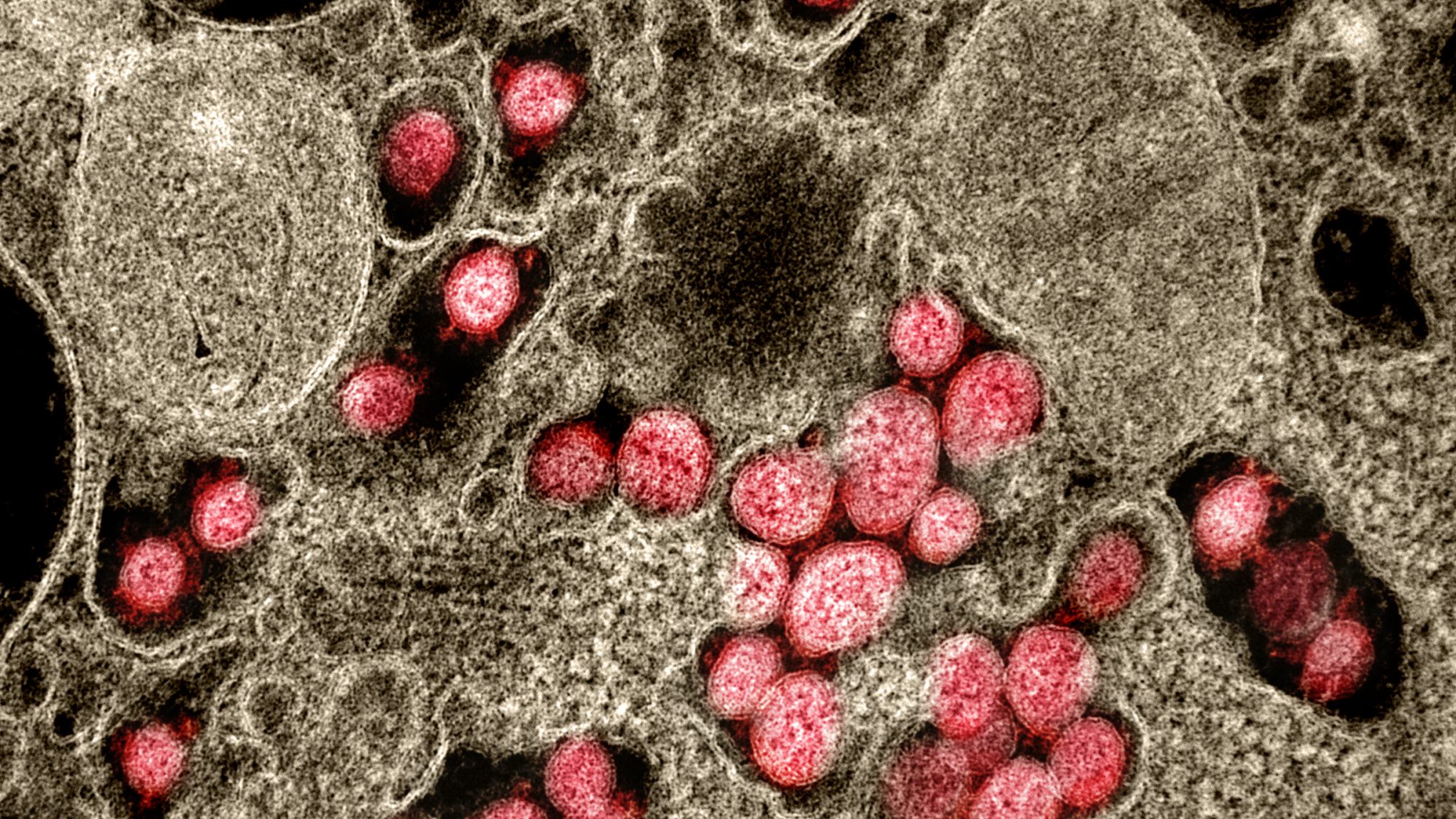

Like Icarus flying too close to the sun, some laboratory scientists have been tempting fate by creating pathogens (i.e., microbes that make people sick) that are more dangerous than those occurring in nature. Other scientists and policy experts, myself included, have joined forces to call for improved oversight and, in some cases, a ban on “gain-of-function” research on pandemic potential pathogens, also called “enhanced potential pandemic pathogen” (ePPP) research. Gain-of-function research involves giving microbes such as bacteria and viruses enhanced capabilities that they might not normally possess in nature. This research currently receives almost no national or international oversight. Not all gain-of-function research is dangerous. For example, turning harmless bacteria into insulin-making machines is a beneficial and cost-effective way to treat diabetes, and most biomedical research has resulted in substantial improvements in medicine and public health. However, the subset of gain-of-function research that enhances the risks posed by pandemic potential pathogens such as avian influenza (H5N1) virus or SARS-CoV deserves closer scrutiny and oversight.

With a case fatality rate of approximately 56 percent, the H5N1 avian influenza virus is much deadlier than SARS-CoV-2, which has an estimated case fatality rate below 2 percent. The H5N1 avian influenza virus first emerged in Hong Kong in 1997 from infected birds, but it was unable to spread readily from mammal to mammal.

Once a pathogen gains the ability to spread easily from mammal to mammal, the risk of it spreading to humans increases. Enter Ron Fouchier, a virologist at Erasmus Medical Center in the Netherlands. In 2011, he and his colleagues decided to give the H5N1 avian influenza virus the enhanced capability of airborne spread between mammals. Fouchier wanted to see what mutations the virus might need to acquire to start a pandemic. He chose ferrets as the experimental animal because they are susceptible to influenza viruses and develop respiratory disease from influenza much as humans do. The experiments were performed in a Biosafety Level 3 (BSL-3) laboratory, one step below the highest-containment laboratories (BSL-4), which feature containment rooms and spacesuits. After introducing mutations into the virus, Fouchier performed serial passages, a form of selective breeding of the virus in ferrets, to obtain novel mutant viruses that are efficiently transmitted by aerosol from mammal to mammal.

The most commonly cited reason for conducting gain-of-function research on pandemic potential pathogens is to be able to predict future pandemics. In other words, by creating novel pandemic potential pathogens in laboratories, scientists like Fouchier would be able to recognize them in the wild before they spilled over from animals into humans.

That’s the theoretical argument, anyway.

In practice, their reasoning is flawed. To see why, consider weather forecasting. Predicting hurricanes is a purely observational exercise that relies on satellite data. Scientists don’t manipulate clouds to see what conditions are needed to start hurricanes. They use atmospheric and climate models instead. In a similar fashion, scientists could employ artificial intelligence and machine learning tools to predict viral evolution instead of manipulating viruses in laboratories. This means that risky gain-of-function research on pandemic potential pathogens is not necessary for predicting future pandemics.

Concerns about risky research prompted the National Academy of Sciences (NAS) to issue a report in 2004 titled “Biotechnology Research in an Age of Terrorism,” listing seven “experiments of concern,” known as the “seven deadly sins,” that should not be pursued if they could create pathogens that are not already present in nature.

These experiments include:

- Demonstrating how to make a vaccine ineffective

- Developing a pathogen’s resistance to antibiotics or antiviral agents

- Enhancing a pathogen’s virulence (i.e., lethality) or making a non-lethal microbe lethal

- Increasing the transmissibility of a pathogen (e.g., making a non-airborne pathogen airborne)

- Altering the host range of a pathogen by increasing the number of species it can infect

- Enabling a pathogen to evade diagnostic testing

- Enabling a biological agent or toxin to be weaponized

The avian influenza (H5N1) virus studies in ferrets fall under the fourth and fifth experiments in the list: increasing transmissibility and altering host range. According to the NAS, these experiments should not have been done.

In the case of SARS-CoV-2, the causative agent of the COVID-19 pandemic, the jury is still out regarding how the virus developed and how it spread from animals to humans. But understanding the origins of the virus is imperative for developing effective policies and procedures that can reduce the likelihood of such a catastrophe happening again. For both SARS and MERS, which emerged in 2003 and 2012, respectively, extensive evidence from clinical specimens (i.e., bodily fluids) taken from animals and humans demonstrated natural spillover events. Clinical specimen evidence includes either the virus itself or the antibodies that bodies make to attack the virus, like microscopic missiles.

For example, palm civets (i.e., exotic mammals eaten as delicacies) sold at an animal market in Guangdong, China, were found to harbor viruses that were 99.8 percent identical to the SARS virus found in humans, and 80 percent of palm civets tested positive for antibodies to the SARS virus. Blood tests of almost 800 people in Guangdong Province found that individuals who traded palm civets had higher rates of SARS antibodies than any other group.

Similarly, with MERS, archived blood specimens from dromedary camels dating back decades throughout the Middle East, parts of Asia, and North and East Africa tested positive for MERS antibodies, providing evidence that the virus had been circulating in the animals for a long time. From 2012 to 2013, a study of over 10,000 healthy adults from all thirteen provinces of Saudi Arabia found MERS antibodies in 0.15 percent of the samples. People who worked with camels had much higher rates of MERS antibodies than the general population. Specifically, camel shepherds had MERS antibody rates fifteen times higher than the public, and slaughterhouse workers had rates a whopping twenty-three times higher.

In other words, the key evidence for natural spillover for both SARS and MERS was clinical specimens from occupational exposures. Working with animals that harbor zoonotic pathogens (microbes that can spread from animals to humans) increased spillover risk in the animal workers. A One Health approach integrating human, animal, and environmental surveillance is essential for monitoring future natural spillover events.

In contrast to SARS and MERS, neither the SARS-CoV-2 virus nor antibodies to the virus have been reported in animals or animal workers. No studies involving clinical specimens that fulfill the criteria for a natural spillover event have been published in the medical literature. In early 2020, George Gao, a former director of the Chinese Center for Disease Control and Prevention, and his colleagues conducted a non-peer-reviewed study that found 0 out of 457 clinical specimens taken from 18 species of animals in the live animal market in Wuhan contained the virus. Chinese researchers conducted surveys of thousands of people to assess the prevalence of antibodies to SARS-CoV-2 in the general population. Unfortunately, none of these studies included occupational data, omitting a critical factor in determining natural spillover risk. In addition, no studies have been published examining SARS-CoV-2 antibody rates in laboratory workers at the Wuhan Institute of Virology (WIV). The window for detecting a difference in antibody rates between laboratory workers and the public, while such a difference would still have existed, closed in early 2020.

The public record, however, indicates that SARS-related coronaviruses were genetically manipulated at the Wuhan Institute of Virology. In 2016–17, researchers at WIV constructed novel chimeric SARS-related coronaviruses that combined the spike genes of different SARS-related coronaviruses. (A chimera possesses genetic material from two different organisms.) The spike protein of SARS-CoV-2, which the virus uses like a key to enter cells, has a highly unusual feature called a “furin cleavage site” that makes the virus highly infectious and is not present in the more than 200 other known SARS-related coronaviruses (i.e., sarbecoviruses). While furin cleavage sites occur naturally in many other coronaviruses, the SARS-CoV-2 furin cleavage site has a unique sequence of amino acids. This feature has been the subject of much debate, with some scientists demanding a full investigation into possible laboratory origins while others stress that there is no evidence of a laboratory origin.

Science must be transparent to maintain its legitimacy. A debate of this magnitude warrants a full investigation into the virus’s origins, especially since there is no compelling evidence for a natural spillover event. Stonewalling such an investigation engenders suspicion.

To prevent future catastrophic pandemics, my colleagues and I propose stronger protections at national and international levels in biosafety, biosecurity, and biorisk management. Biosafety and biosecurity protect against the accidental and deliberate release of pathogens from laboratories, respectively. In the United States, there are currently no biosafety regulations, only guidelines. Biosecurity is covered by the Select Agent Rule, but it applies only to several dozen human, livestock, and crop pathogens and biological toxins that are considered high risk for bioweapon use.

Biorisk management involves risk-benefit assessments of proposed high-risk research on dangerous pathogens that might qualify as one of the seven “experiments of concern.” From 2014 to 2016, the US government imposed a pause in federal funding for gain-of-function research on SARS, MERS, and influenza viruses. As with biosafety and biosecurity, the same federal agencies that perform and fund gain-of-function research on pandemic potential pathogens are also in charge of biorisk management. An inherent conflict of interest exists, and the system is opaque, with no public scrutiny or input.

In all three areas (biosafety, biosecurity, and biorisk management), oversight must be conducted by an independent national agency that does not perform or fund research. Oversight regulations should be transparent, enforced, and harmonized at the international level. Given the uncertainty surrounding the origins of SARS-CoV-2, it is prudent to cover all bases in developing policies to prevent another catastrophic pandemic like COVID-19.

. . .

Laura H. Kahn is a physician, author, educator, and consultant. For almost twenty years she was a research scholar at the Program in Science and Global Security at Princeton University. She is currently completing a book using a One Health framework to examine coronaviruses.

Image Credits: Wikimedia, NIAID Integrated Research Facility